Introduction

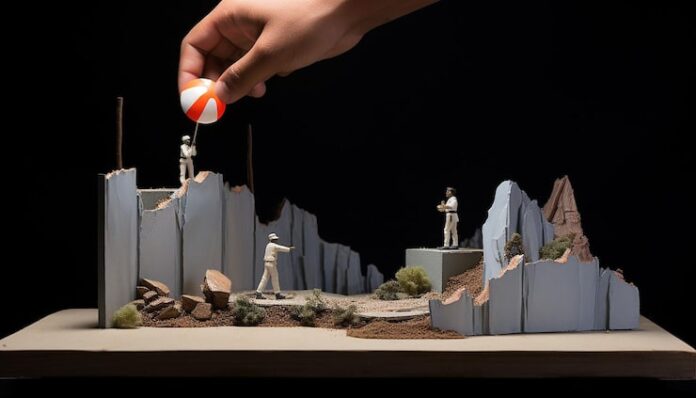

Imagine two artists standing in a studio. One sketches carefully, adding faint lines, erasing imperfections and refining shapes until the final portrait captures both realism and imagination. The other artist paints boldly with strong strokes, competing with an inner critic who constantly challenges every color choice and brush movement. These two creative journeys mirror the design philosophies of Variational Autoencoders and Generative Adversarial Networks. Both models generate new data, yet the way they learn, create and refine their work differs dramatically. These differences often become clearer during a Data Science Course, where learners explore how creativity and mathematics blend inside generative models.

Deep generative models offer a window into how machines imagine. Understanding their architectures explains why they produce such different kinds of output.

The World of Variational Autoencoders: The Thoughtful Storyteller

A Variational Autoencoder can be imagined as a storyteller who constructs narratives by understanding the structure of past experiences. It does not simply memorize examples; it learns the deeper patterns in the data and expresses them through a latent space, a compact, lower-dimensional space of codes. This latent space acts like a library of abstract ideas from which new stories can be created.

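A VAE consists of two key components. The encoder compresses each input into a compact representation, and the decoder reconstructs the data from that compressed form. What sets a VAE apart from an ordinary autoencoder is that its latent space is probabilistic: instead of mapping an input to a single fixed point, the encoder outputs the parameters of a distribution, typically the mean and variance of a Gaussian, and a latent code is sampled from it. This controlled randomness lets the model generate variations of the patterns it has learned, and new data can be created by sampling a code from the prior and decoding it.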
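To make the probabilistic latent space concrete, here is a minimal NumPy sketch of how a latent code is commonly drawn from the encoder's output using the reparameterization trick. It assumes the encoder returns a mean `mu` and a log-variance `log_var` per latent dimension (a common convention, not something this article specifies); the values are hypothetical and no actual network is trained.

```python
import numpy as np

def sample_latent(mu, log_var, rng):
    """Draw z ~ N(mu, diag(exp(log_var))) with the reparameterization trick."""
    std = np.exp(0.5 * log_var)          # log-variance -> standard deviation
    eps = rng.standard_normal(mu.shape)  # noise drawn independently of mu and log_var
    return mu + std * eps                # z is a differentiable function of mu and log_var

rng = np.random.default_rng(0)
mu = np.array([0.5, -1.0])        # hypothetical encoder mean for one input
log_var = np.array([-2.0, -2.0])  # hypothetical encoder log-variance
samples = np.stack([sample_latent(mu, log_var, rng) for _ in range(10_000)])
print(samples.mean(axis=0))  # close to mu
print(samples.std(axis=0))   # close to exp(-1), roughly 0.37
```

Writing the sample as `mu + std * eps` keeps all the randomness in `eps`, so during training gradients can flow back into the encoder's `mu` and `log_var`.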

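The VAE trains by minimizing a loss with two terms: a reconstruction error, which keeps decoded outputs close to the original inputs, and a Kullback-Leibler (KL) divergence term, which pulls each encoded distribution toward a simple prior, usually a standard Gaussian. This balance keeps reconstructions faithful while keeping the latent space smooth, organized and navigable.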
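The two-part objective can be sketched as follows. This assumes squared error as the reconstruction term (which corresponds, up to scale and a constant, to a fixed-variance Gaussian decoder) and a standard-normal prior; the relative weighting of the two terms varies between implementations. The sum of the terms is the negative evidence lower bound (ELBO), and the KL term has a closed form for diagonal Gaussians.

```python
import numpy as np

def vae_loss(x, x_recon, mu, log_var):
    """Negative ELBO for a single example, under the assumptions stated above."""
    recon = np.sum((x - x_recon) ** 2)  # how far the reconstruction is from the input
    # Closed-form KL(N(mu, exp(log_var)) || N(0, 1)), summed over latent dimensions
    kl = -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var))
    return recon + kl, recon, kl

x = np.array([1.0, 0.0, 1.0])        # hypothetical input
x_recon = np.array([0.9, 0.1, 0.8])  # hypothetical decoder output
# An encoder output that exactly matches the prior contributes zero KL.
total, recon, kl = vae_loss(x, x_recon, mu=np.zeros(2), log_var=np.zeros(2))
print(total, recon, kl)
```

Shifting the encoder's mean away from zero, for example `mu = [1, 0]`, raises the KL term to 0.5, which is how the loss keeps encoded distributions close to the prior.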

The World of Generative Adversarial Networks: The Creative Rivalry

A Generative Adversarial Network functions like a fierce artistic duel between two rivals. One artist creates new works while the other attempts to identify flaws. The first artist is the generator and the critic is the discriminator. The generator tries to create data that looks real while the discriminator tries to distinguish between real and generated samples.
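In the original GAN formulation, this duel is written as a two-player minimax game over a value function $V(D, G)$. Here $D(x)$ is the discriminator's estimated probability that $x$ is real, $G(z)$ is the generator's output for a noise vector $z$ drawn from a prior $p_z$, and $p_{\text{data}}$ is the distribution of real data:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator maximizes $V$ by scoring real data high and generated data low; the generator minimizes it by pushing $D(G(z))$ toward 1.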

This rivalry drives rapid improvement. The two networks are trained in alternating steps: whenever the critic catches a flaw, the generator uses the discriminator's feedback (its gradients) to adjust its technique, and whenever the generator becomes more convincing, the critic sharpens its scrutiny. This competitive learning process is what gives GANs their reputation for producing exceptionally realistic images, audio samples and synthetic data.
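In practice, the generator's and discriminator's goals are usually expressed as binary cross-entropy losses and minimized in alternating steps: one update for the discriminator, then one for the generator. The NumPy sketch below only computes those losses from discriminator outputs (probabilities); the function names are illustrative, and the generator uses the common "non-saturating" form, which asks the critic to label fakes as real instead of minimizing `log(1 - D(G(z)))` directly.

```python
import numpy as np

def bce(p, target):
    """Mean binary cross-entropy between probabilities p and a constant label."""
    p = np.clip(p, 1e-12, 1.0 - 1e-12)  # keep log() finite
    return -np.mean(target * np.log(p) + (1.0 - target) * np.log(1.0 - p))

def discriminator_loss(d_real, d_fake):
    # The critic should score real samples as 1 and generated samples as 0.
    return bce(d_real, 1.0) + bce(d_fake, 0.0)

def generator_loss(d_fake):
    # Non-saturating form: the generator wants its samples scored as real (1).
    return bce(d_fake, 1.0)

# A critic that cannot tell real from fake outputs 0.5 for everything.
d_real = np.full(4, 0.5)
d_fake = np.full(4, 0.5)
print(discriminator_loss(d_real, d_fake))  # 2 * log(2), about 1.386
print(generator_loss(d_fake))              # log(2), about 0.693
```

As the critic grows more confident, its own loss falls and the generator's loss rises; that rising loss is the signal the generator learns from on its next step.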

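However, this rivalry can also cause instability. If the discriminator becomes too strong, the generator receives almost no useful gradient and stops improving; if the generator finds a few outputs that reliably fool the critic, it can fall into mode collapse and produce only a narrow range of samples. This tension turns GAN training into both an art form and a mathematical challenge, one explored by learners in a data scientist course in Hyderabad, where balancing adversarial forces becomes a central theme.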
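One concrete face of this instability is vanishing gradients. The sketch below compares the gradient that two generator losses pass back through the discriminator's logit `l` (where `D = sigmoid(l)`) when the critic confidently rejects generated samples. It is a toy calculation on fixed logits, not a training run.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

# Discriminator logits for generated samples; very negative values mean the
# critic is confident the samples are fake (it is "winning").
logits = np.array([-8.0, -4.0, 0.0])
d_fake = sigmoid(logits)

# Gradient of each generator loss with respect to the logit l, where D = sigmoid(l):
#   minimax loss         log(1 - sigmoid(l))  has derivative  -sigmoid(l)
#   non-saturating loss  -log(sigmoid(l))     has derivative  -(1 - sigmoid(l))
grad_minimax = -d_fake
grad_non_saturating = -(1.0 - d_fake)

for l, g_mm, g_ns in zip(logits, grad_minimax, grad_non_saturating):
    print(f"logit {l:+.0f}: minimax {g_mm:.4f}, non-saturating {g_ns:.4f}")
```

At a logit of -8 the minimax loss passes back a gradient of only about -0.0003, while the non-saturating loss still passes back nearly -1. This is why the original GAN paper suggested the non-saturating form for training in practice.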

Architectural Differences: How the Two Worlds Create in Different Ways

The architectural differences between VAEs and GANs shape the nature of their outputs. VAEs rely on reconstruction-based learning: they observe data, encode it into the latent space and then decode it. Their training objective encourages generated samples to resemble real data while also keeping the latent structure continuous and meaningful. This structure allows smooth interpolation between samples.
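Smooth interpolation amounts to walking a straight line between two latent codes and decoding each point along the way. This minimal sketch only produces the latent path; the codes are hypothetical and the decoder is not shown. Some practitioners prefer spherical interpolation for Gaussian latents, but linear interpolation is enough to illustrate the idea.

```python
import numpy as np

def interpolate(z_start, z_end, steps):
    """Return `steps` latent vectors evenly spaced on the line from z_start to z_end."""
    alphas = np.linspace(0.0, 1.0, steps)[:, None]  # one mixing weight per row
    return (1.0 - alphas) * z_start + alphas * z_end

z_a = np.array([1.0, 0.0])   # hypothetical latent code of image A
z_b = np.array([-1.0, 2.0])  # hypothetical latent code of image B
path = interpolate(z_a, z_b, 5)
print(path)  # each row would be decoded (decoder not shown) into an in-between image
```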

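GANs, on the other hand, do not reconstruct their inputs. The generator maps random noise directly to new samples, and the objective is adversarial: instead of minimizing a reconstruction error, the generator is trained to make the discriminator fail to tell fake from real, while the discriminator is trained to succeed. Because the generator is never scored pixel by pixel against one specific target, it is not pushed toward averaging over several plausible outputs, which is one common explanation for why GANs often produce sharper and more detailed outputs than VAEs.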
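The averaging effect can be seen in a toy calculation. Assume one input has two equally likely correct outputs, a pixel value of -1 or +1 (a stand-in for real ambiguity, such as the exact position of an edge). The single prediction that minimizes mean squared error, a typical reconstruction loss, is their average, which matches neither.

```python
import numpy as np

# Two equally plausible "correct" values for the same pixel (toy assumption).
targets = np.array([-1.0, 1.0])

# Score every candidate prediction by its mean squared error against both outcomes.
candidates = np.linspace(-1.0, 1.0, 201)
mse = ((candidates[:, None] - targets[None, :]) ** 2).mean(axis=1)

best = candidates[np.argmin(mse)]
print(best)  # close to 0: a grey compromise that matches neither target
```

Here the MSE for a prediction `c` is `c**2 + 1`, so the minimum sits at 0. An adversarial critic, by contrast, would readily flag a grey pixel as unlike any real sample.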

VAEs are organized, structured and stable. GANs are bold, expressive and often unpredictable. These architectural traits influence which model is chosen for which application.

Latent Spaces: Ordered Knowledge versus Creative Freedom

A VAE latent space is like a carefully arranged museum where each section represents meaningful variations of the input data. Moving through this space feels smooth and interpretable. You can adjust attributes gradually, which makes VAEs well suited to tasks where controlled generation matters, such as image editing or feature analysis.
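Gradual attribute control is often illustrated with latent arithmetic: average the codes of examples that have an attribute, subtract the average of those that do not, and move a code along the resulting direction. The sketch below uses synthetic latent codes in place of real encoder outputs, so the attribute, the helper names and all values are hypothetical.

```python
import numpy as np

def attribute_vector(z_with, z_without):
    """Difference of mean latent codes for samples with and without an attribute."""
    return z_with.mean(axis=0) - z_without.mean(axis=0)

def edit(z, direction, strength):
    """Move a latent code along an attribute direction; strength 0 leaves it unchanged."""
    return z + strength * direction

rng = np.random.default_rng(1)
# Hypothetical latent codes; in practice these would come from a trained VAE encoder
# applied to labelled examples (e.g. faces with and without glasses).
z_with = rng.normal(loc=[1.0, 0.0, 0.0], scale=0.1, size=(50, 3))
z_without = rng.normal(loc=[0.0, 0.0, 0.0], scale=0.1, size=(50, 3))

direction = attribute_vector(z_with, z_without)
z = np.array([0.0, 0.5, -0.5])
for s in (0.0, 0.5, 1.0):
    print(s, edit(z, direction, s))  # decoding each edited code would show the attribute fade in
```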

A GAN latent space is less explicitly organized. Nothing in the adversarial objective asks for a tidy latent layout, and a standard GAN has no encoder, so there is no direct way to map a real image back to its latent code. It is like a vibrant art market filled with spontaneous creativity: you can stumble upon breathtaking works, but navigating the market is less predictable. GANs excel at producing visually striking samples, though controlling specific attributes can be more challenging.
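Because a standard GAN has no encoder, locating a real image in its latent space usually means searching for a code, often by gradient descent on the reconstruction error, a process known as GAN inversion. The sketch assumes a toy linear generator `G(z) = W @ z` so the gradient can be written by hand; with a real nonlinear generator the search is non-convex and typically relies on automatic differentiation, so exact recovery is not guaranteed.

```python
import numpy as np

rng = np.random.default_rng(0)
# Toy linear "generator" G(z) = W @ z, standing in for a trained network so that
# the gradient can be written by hand (an assumption for illustration).
W = rng.standard_normal((8, 4))
z_true = rng.standard_normal(4)
x_target = W @ z_true  # the "real" sample whose latent code we want to find

def invert(W, x, steps=20_000):
    """Gradient descent on ||W z - x||^2, starting from z = 0."""
    lr = 1.0 / (2.0 * np.linalg.norm(W, 2) ** 2)  # step size 1/L for this quadratic
    z = np.zeros(W.shape[1])
    for _ in range(steps):
        grad = 2.0 * W.T @ (W @ z - x)  # gradient of the squared reconstruction error
        z -= lr * grad
    return z

z_hat = invert(W, x_target)
print(np.abs(z_hat - z_true).max())  # tiny: the code is recovered in this convex toy case
```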

The contrast between order and freedom illustrates why different industries choose one model over the other depending on the level of interpretability or realism required.

Applications Shaped by Architecture: Where Each Model Excels

Because VAEs produce smooth and interpretable latent spaces, they are widely used in anomaly detection (where inputs the model reconstructs poorly are flagged as unusual), image reconstruction, representation learning and synthetic data generation where structure matters. Their stable training makes them a reliable choice for scientific and industrial applications.
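Anomaly detection with an autoencoder-style model typically scores each input by how poorly it is reconstructed and flags scores above a threshold set on normal data. To stay self-contained, the sketch below substitutes a one-dimensional linear autoencoder, fitted with PCA, for a trained VAE; the data, the 99th-percentile threshold and the helper names are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(2)
# "Normal" training data: points along the line y = x with a little noise.
t = rng.normal(size=500)
normal = np.column_stack([t, t]) + rng.normal(scale=0.05, size=(500, 2))

# Stand-in for a trained VAE: a 1-D linear autoencoder fitted with PCA (an assumption
# made to keep the sketch self-contained).
mean = normal.mean(axis=0)
_, _, vt = np.linalg.svd(normal - mean, full_matrices=False)
component = vt[0]  # direction of greatest variance

def reconstruct(x):
    code = (x - mean) @ component            # encode: 1-D coordinate along the line
    return mean + np.outer(code, component)  # decode: map the coordinate back to 2-D

def anomaly_score(x):
    return np.sum((x - reconstruct(x)) ** 2, axis=1)  # squared reconstruction error

threshold = np.percentile(anomaly_score(normal), 99)  # about 1% of normal data lies above
queries = np.array([[1.0, 1.0], [2.0, -2.0]])         # on-pattern vs off-pattern point
print(anomaly_score(queries) > threshold)             # expect only the second point flagged
```

With a real VAE, `reconstruct` would pass inputs through the trained encoder and decoder, and the threshold would be tuned on held-out normal data.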

GANs are a popular choice in areas that demand high-quality, visually rich outputs, such as image synthesis, video generation, style transfer and even audio creation. Their competitive learning mechanism results in crisp, lifelike samples.

Understanding these application differences helps professionals decide which generative model aligns with their goals.

Conclusion

Variational Autoencoders and Generative Adversarial Networks represent two distinct artistic philosophies in the world of machine learning. One learns through introspection and reconstruction while the other grows through rivalry and competition. Their architectures define how they imagine new data and what qualities they bring into their creations.

These insights align with what learners explore in a Data Science Course, where architectural choices shape the future of artificial intelligence. Professionals trained in a data scientist course in Hyderabad gain an appreciation for how generative models blend creativity, probability and computation.

Business Name: Data Science, Data Analyst and Business Analyst

Address: 8th Floor, Quadrant-2, Cyber Towers, Phase 2, HITEC City, Hyderabad, Telangana 500081

Phone: 095132 58911